Six things I wish I would've known when I started working with Istio

Service meshes and networking in Kubernetes have their tricks!

Service meshes are a really interesting concept, and they sell you on the benefits of, well, the service mesh itself: mutual TLS, end-to-end encryption, and more.

Unfortunately, not everything is as straightforward as you might think. In fact, Istio’s own documentation has a full section dedicated to “common issues”. These issues are not so evident if you come from an Ingress Controller world and assume that the same knowledge carries over to Istio.

Here are 6 things I wish I would’ve known when I started working with Istio!

Port names matter

The most obvious one (or perhaps not so obvious?), and the one that seems to hit me the most even though I know about it, is that in Istio, port names matter. In fact, they matter so much that how traffic is routed to your container depends heavily on the name of the given port.

Istio calls this “protocol selection” and, in short, it uses a format of $NAME[-$SUFFIX] where $NAME is a predefined protocol name, and the suffix, denoted by $SUFFIX, can be omitted or defined for better traceability – things like the Istio metrics can use this suffix and the port name to better display traffic stats.

There are 3 main ones to know in my opinion, although the list is a bit longer than that:

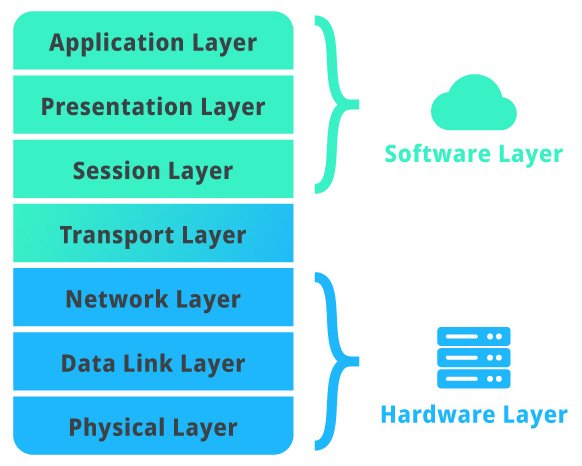

- http and http2 which, strangely enough, both use either HTTP/1.1 or HTTP/2; and,

- https and/or tls for TLS-based traffic; and finally,

- tcp for any traffic where you do not want the Layer 7 data to be sniffed or acted upon (more on this in the next point)

What these mean in practice is that it is okay to name your port http and get the commonly non-TLS traffic to your container routed to it. But providing a customized name, such as appport or something along those lines, is a no-no, since traffic might be handled differently by Istio if that were to happen.
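As a minimal sketch of the naming convention, the Service below uses a protocol prefix plus an optional suffix for each port. The Service name, labels, and port numbers are made up for illustration; only the name format comes from Istio's protocol selection rules.

```yaml
apiVersion: v1
kind: Service
metadata:
  name: my-app              # hypothetical name
spec:
  selector:
    app: my-app             # hypothetical label
  ports:
    - name: http-api        # "http" protocol, "api" suffix
      port: 80
      targetPort: 8080
    - name: grpc-internal   # gRPC, handled as HTTP/2
      port: 9090
      targetPort: 9090
    - name: tcp-metrics     # opaque TCP, no Layer 7 handling
      port: 9100
      targetPort: 9100
```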

There are a few exceptions: Istio ships with some aliases. One of the most common examples is gRPC. Since gRPC runs on top of HTTP/2, which is itself a binary protocol thanks to its frames, a bit of Layer 7 data needs to be understood to handle it. This is why Istio defines grpc as an alias of http2.

The downside is that it is quite common to find Helm charts that name their port web. I can’t blame them: web has been widely used as a port name since the early days of Kubernetes, and it makes sense that whoever wrote a Helm chart or YAML manifest would name the port whatever they want. As a name, web is as good as frontend.

However, this becomes a nuisance if you terminate TLS at the container level and are therefore expecting TLS traffic. Naming the port web might break your TLS connectivity, yielding some awkward error messages for your users. More about this in the next point.
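If you can't rename the port because the chart hardcodes it, one option that should work on Kubernetes 1.18 and later is the Service's appProtocol field, which Istio also reads for protocol selection. This is only a sketch with made-up names and ports, assuming the container terminates TLS itself:

```yaml
apiVersion: v1
kind: Service
metadata:
  name: my-chart-app        # hypothetical name
spec:
  selector:
    app: my-chart-app       # hypothetical label
  ports:
    - name: web             # name kept as the chart defines it
      appProtocol: https    # declares the protocol explicitly instead of relying on the name
      port: 443
      targetPort: 8443      # assumed TLS port of the container
```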

TCP vs http/https traffic

The next thing I wish I would’ve known is the fact that tcp traffic is sent as-is to the container, without any Layer 7 data processing or sniffing, like header handling or similar, while other kinds of traffic – defined by the name as discussed in the previous point – could involve some additional handling.

This additional handling is done by what’s called the “HTTP Connection Manager” in Envoy. This nifty tool allows you to perform some powerful routing and filtering based on headers, paths, and other data you can read from the request. It’s a big part of the traffic management tooling in Istio, and one of its most significant selling points.

What this means in practice, connection manager aside, is that if your application expects this kind of handling, you must get the protocol right, which means getting the port name right. And while the protocol is given by the name, there are subtle differences, for example, between plain tcp traffic and protocol-specific handlers like mongo, mysql or redis.

The best example I have is an issue I ran into while testing some ArgoCD stuff. ArgoCD serves different protocols on the same port, multiplexing them by sniffing the incoming data. By default, the ArgoCD Kubernetes Service exposes ports 80 and 443, but both Service ports are sent to the same port on the container. Due to this mismatch between the port names and the kind of content expected at the port, ArgoCD could not receive traffic until one of the ports in the Service was removed and the remaining port was renamed to tcp 🤷. See this GitHub issue for more context.
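To give an idea of what the end result looked like, here is a rough sketch of a single-port Service along the lines of the workaround described above. The name, namespace, label, and port numbers are assumptions based on a default ArgoCD install, so check them against your own manifests:

```yaml
apiVersion: v1
kind: Service
metadata:
  name: argocd-server       # assumed default name
  namespace: argocd         # assumed namespace
spec:
  selector:
    app.kubernetes.io/name: argocd-server   # assumed label
  ports:
    - name: tcp             # opaque TCP, so ArgoCD does its own multiplexing
      port: 443
      targetPort: 8080      # assumed container port
```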

You might say “but Patrick, what ArgoCD is doing is not that common: most of the apps built out there listen on HTTP/S and perform no multiplexing” and you’d be right! The only time this will come back to bite you is when those special-case scenarios, such as ArgoCD, show up in your day-2 operations.

Asynchronous order of startup of containers

The third issue you’ll likely face is that containers that are sensitive to network changes might not play well with Istio. Concretely, if your application is expecting some sort of networking from the get-go, you might need to fine-tune its startup or even hook up readiness, liveness and startup checks. More about this later.

The best way to describe this is to first understand that Istio works by injecting a sidecar container into the Pods of your deployments. As part of that injection, the Pod gets NET_ADMIN and NET_RAW capabilities (by default through an init container that sets up iptables rules), which is basically what allows any traffic sent to the Pod to be hijacked and routed through the sidecar instead of going directly to your container: that’s how Istio achieves things like Mutual TLS, for example.
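For reference, this is roughly what the injected pieces look like if you dump a meshed Pod with kubectl get pod -o yaml. In a default (non-CNI) install, the capabilities are requested by an injected init container that sets up the redirection rules. This is an abridged sketch, and the exact fields vary between Istio versions:

```yaml
# Abridged excerpt of an injected Pod spec (most fields omitted)
spec:
  initContainers:
    - name: istio-init        # sets up the iptables redirection, then exits
      securityContext:
        runAsUser: 0
        capabilities:
          add:
            - NET_ADMIN
            - NET_RAW
          drop:
            - ALL
  containers:
    - name: istio-proxy       # the Envoy sidecar that receives the redirected traffic
    - name: my-app            # hypothetical application container
```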

The bad news is that the Envoy sidecar might not boot faster than your app, and if Envoy is supposed to hijack traffic but it’s not ready yet, your application can hit some subtle network failures during startup. Not only that: Envoy hijacks incoming traffic, but it hijacks outgoing traffic as well! Now, there are ways to control what it can do with the hijacked traffic in the form of ServiceEntries, but that’s probably a discussion for another time 😁.

The best way to shield your app from these network hiccups is either delaying the start of your application or configuring Istio to hold the application until the proxy has started. For the former there are multiple options, such as letting the app crash and hoping networking is ready when the container starts the second time, or replacing the command of your Deployment, but these are just hacks.

The latter can be achieved by adding an annotation to the Pod template of your Deployment:

    annotations:
      proxy.istio.io/config: '{ "holdApplicationUntilProxyStarts": true }'

Keep in mind that support for this annotation seems to exist only since Istio 1.7. For older versions, you would have to resort to what was discussed before: I saw a nifty trick that replaces the entrypoint of your container with a short command that polls the /healthz/ready endpoint of the sidecar. If the endpoint responds with a 200, it means the proxy is ready and your container can start:

    # Poll the sidecar readiness endpoint until it answers with HTTP 200
    until [ "$(curl --fail --silent --output /dev/stderr --write-out "%{http_code}" localhost:15020/healthz/ready)" -eq 200 ]; do
      echo "Waiting for proxy..."
      sleep 1
    done

The quirk of this solution is that it may not work for you if you’re using a distroless or scratch container, since there is no shell to run it. It also requires bundling this into the container as a shell script, or passing it as a one-liner in your YAML, and ensuring curl is installed. Not as straightforward but hey! Might work for you 😄.
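As a rough idea of the one-liner variant, the Deployment below wraps a hypothetical /app/server binary. The image, names, and binary path are placeholders, and the image is assumed to ship both sh and curl:

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: my-app                            # hypothetical name
spec:
  selector:
    matchLabels:
      app: my-app
  template:
    metadata:
      labels:
        app: my-app
    spec:
      containers:
        - name: my-app
          image: example.com/my-app:1.0   # placeholder image with sh and curl
          command: ["/bin/sh", "-c"]
          args:
            - |
              # Wait for the sidecar to report ready, then hand over to the app
              until curl --fail --silent --output /dev/null localhost:15020/healthz/ready; do
                echo "Waiting for proxy..."
                sleep 1
              done
              exec /app/server
```

Using exec at the end replaces the shell with the application process, so signals from Kubernetes still reach the app directly.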

It’s possible to also set this installation-wide by setting it as a configuration option. Istio’s own documentation explains how.
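Assuming you install Istio through an IstioOperator manifest, the mesh-wide version should look roughly like this. Double-check the field against the docs for your Istio version:

```yaml
apiVersion: install.istio.io/v1alpha1
kind: IstioOperator
spec:
  meshConfig:
    defaultConfig:
      # Applies to every injected sidecar unless a Pod overrides it
      holdApplicationUntilProxyStarts: true
```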

Outgoing traffic and ServiceEntries

We briefly touched on this in the previous point, so the TL;DR here is that if your application also makes outgoing connections, that traffic is proxied by Envoy/Istio too. As such, you need to make sure it works as expected and that Istio recognizes it so it doesn’t get mangled, for example with connections being unexpectedly closed, especially to databases!

It seems the core reason here is that some services might use host-based routing – as in, reading the Host: header. Istio might strip that header in some cases and/or some load balancers might misbehave in some other scenarios.

The best way to avoid this is using a ServiceEntry and configuring it so the endpoint you’re trying to connect to is defined as external to the mesh. For example, this code is taken verbatim from the Istio issue linked in the first paragraph, and it configures access to a SQL database by its IP:

    # service-entry.yaml
    apiVersion: networking.istio.io/v1alpha3
    kind: ServiceEntry
    metadata:
      name: cloud-sql-instance
    spec:
      hosts:
      - 104.155.22.115
      ports:
      - name: tcp
        number: 3307
        protocol: tcp
      location: MESH_EXTERNAL
      resolution: DNS

The hosts entry is the IP we’re trying to connect to (which can be an FQDN), and location marks it as “external to the mesh”. Other services might also need these ServiceEntries if they happen to misbehave 😉.
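For the FQDN case, a sketch could look like the following. The hostname, port, and resource name are invented for the example, so swap in the endpoint your application actually talks to:

```yaml
apiVersion: networking.istio.io/v1alpha3
kind: ServiceEntry
metadata:
  name: external-database       # hypothetical name
spec:
  hosts:
    - db.example.com            # hypothetical FQDN of the external service
  ports:
    - name: tcp-db
      number: 5432              # assumed port
      protocol: TCP
  location: MESH_EXTERNAL       # the endpoint lives outside the mesh
  resolution: DNS               # resolve the host through DNS
```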

Healthcheck hijack/rewrite

This one isn’t a problem per se, but it did catch me off guard: Istio rewrites the HTTP healthcheck endpoints of your application to different endpoints. There’s a good reason behind this. Consider what we know:

- Envoy hijacks all traffic from the Pod

- Kubernetes needs to perform readiness/liveness/startup checks by, if configured, calling HTTP endpoints

- If Kubernetes tries to connect to your container inside the Pod, the request will come through Envoy

To avoid marking a container/pod unhealthy just because we can’t connect to it via strict TLS, for example, Istio rewrites the healthcheck process and includes logic to allow the kubelet to perform the HTTP call to your container to check for readiness and liveness. The official Istio docs for Health Checks document the whole process better than I can here.

When looking at your logs, or the Envoy logs, keep this in mind if you see an odd endpoint being called that your application has never had.
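To make that concrete, here is a sketch of a probe before and after the rewrite, following the pattern shown in the Istio health check docs. The container name, path, and port are made up, and the exact rewritten path may differ between Istio versions:

```yaml
# What you wrote in your Pod spec (container named "my-app", hypothetical)
livenessProbe:
  httpGet:
    path: /healthz
    port: 8080
---
# What the injected Pod ends up with: the kubelet now calls the sidecar agent,
# which forwards the check to the original path and port of your container
livenessProbe:
  httpGet:
    path: /app-health/my-app/livez
    port: 15020
```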

You can disable health check rewrites by adding the following annotation to your Pod, but keep in mind you might want to keep them on 😉:

    annotations:
      sidecar.istio.io/rewriteAppHTTPProbers: "false"

This issue does not exist, evidently, if your checks are commands and not HTTP calls.

Can’t tcpdump the Envoy sidecar

And the last one, quite simple yet quite interesting too, is that it’s not possible to run tcpdump on the Envoy sidecar. It fails with the error:

    You don't have permission to capture on that device

The reason is simple and honestly it has nothing to do with Envoy, but with the reduced permissions of Istio’s Envoy container, which isn’t running as root and as such can’t capture on the network interface. It’s possible to configure the proxy to run as root by setting the value values.global.proxy.privileged=true in your Istio configuration. After that, you’re free to sudo tcpdump away! Make sure to disable the privileged mode once you’re done.
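If your install is driven by an IstioOperator manifest, that value would sit roughly here. This is a sketch, and the exact layout may differ if you install through Helm instead:

```yaml
apiVersion: install.istio.io/v1alpha1
kind: IstioOperator
spec:
  values:
    global:
      proxy:
        # Debugging only: runs the sidecar as a privileged container
        privileged: true
```

Pods need to be restarted to pick up a re-injected sidecar with the new setting.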

That’s it! I’m sure there are several other issues that newcomers to Istio run into. However, the ones listed above are the ones I’ve seen the most in my day-to-day endeavours!

Any corrections/objections? Leave me a comment and I’ll take care of it as soon as possible!